The aim of this project is to design and train a novel Artificial Neural Network (ANN), LiDomNet, that recovers the odometry (i.e., a quaternion describing the rotation and a translation vector) corresponding to the movement between two consecutive 3D point clouds. For this purpose, we use the public KITTI dataset, which provides, among other data, measurements from a 64-beam 3D LiDAR mounted on top of a moving car. The dataset also provides ground-truth data of the car's movement gathered with an Inertial Measurement Unit (IMU). This project is still active.
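The paragraph above does not say how the regression targets are produced. As a minimal sketch, assuming the ground-truth trajectory is available as absolute 4×4 homogeneous poses (KITTI's odometry ground truth, for instance, stores each pose as the 12 values of a 3×4 matrix), the motion between two consecutive scans can be computed as `T_rel = inv(T_prev) · T_next` and split into a unit quaternion and a translation vector. The helper names are hypothetical, this is not claimed to be LiDomNet's actual preprocessing, and transforming the poses into the LiDAR frame with the sensor calibration is omitted.

```python
import math


def mat_mul(a, b):
    """Multiply two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def invert_rigid(t):
    """Invert a rigid transform [R | p] as [R^T | -R^T p] (cheaper than a general inverse)."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    p_inv = [-sum(r_t[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [r_t[i] + [p_inv[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def rot_to_quat(r):
    """Convert a 3x3 rotation matrix to a unit quaternion (w, x, y, z)."""
    trace = r[0][0] + r[1][1] + r[2][2]
    if trace > 0:
        s = 2.0 * math.sqrt(trace + 1.0)
        return (0.25 * s, (r[2][1] - r[1][2]) / s, (r[0][2] - r[2][0]) / s, (r[1][0] - r[0][1]) / s)
    # Otherwise branch on the largest diagonal term to avoid dividing by a value near zero.
    if r[0][0] > r[1][1] and r[0][0] > r[2][2]:
        s = 2.0 * math.sqrt(1.0 + r[0][0] - r[1][1] - r[2][2])
        return ((r[2][1] - r[1][2]) / s, 0.25 * s, (r[0][1] + r[1][0]) / s, (r[0][2] + r[2][0]) / s)
    if r[1][1] > r[2][2]:
        s = 2.0 * math.sqrt(1.0 + r[1][1] - r[0][0] - r[2][2])
        return ((r[0][2] - r[2][0]) / s, (r[0][1] + r[1][0]) / s, 0.25 * s, (r[1][2] + r[2][1]) / s)
    s = 2.0 * math.sqrt(1.0 + r[2][2] - r[0][0] - r[1][1])
    return ((r[1][0] - r[0][1]) / s, (r[0][2] + r[2][0]) / s, (r[1][2] + r[2][1]) / s, 0.25 * s)


def relative_motion(pose_prev, pose_next):
    """Return (quaternion, translation) of T_rel = inv(T_prev) * T_next, in the previous pose's frame."""
    t_rel = mat_mul(invert_rigid(pose_prev), pose_next)
    quat = rot_to_quat([row[:3] for row in t_rel[:3]])
    trans = [t_rel[i][3] for i in range(3)]
    return quat, trans


if __name__ == "__main__":
    # Hypothetical example: a 90-degree yaw combined with a 1 m step along x.
    identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    pose_next = [[0.0, -1.0, 0.0, 1.0],
                 [1.0, 0.0, 0.0, 0.0],
                 [0.0, 0.0, 1.0, 0.0],
                 [0.0, 0.0, 0.0, 1.0]]
    quat, trans = relative_motion(identity, pose_next)
    print(quat, trans)  # quaternion ≈ (0.7071, 0, 0, 0.7071), translation ≈ [1, 0, 0]
```

Note that `q` and `-q` encode the same rotation, so if quaternions are used directly as network targets, one may want to fix a sign convention (for example, `w >= 0`).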

The SIAR project will develop a fully autonomous ground robot able to navigate and inspect the sewer system autonomously with minimal human intervention, while still allowing manual control of the vehicle or the sensor payload when required. The project uses IDMind's RaposaNG robot platform as its starting point. A new robot will be built on this know-how, with the following key steps beyond the state of the art required to properly address the challenge: a robust IP67 robot frame designed to work in the harshest environmental conditions, with increased power autonomy and flexible inspection capabilities; robust and increased communication capabilities; onboard autonomous navigation and inspection capabilities; and usability and cost-effectiveness of the developed solution. UPO leads the navigation tasks in the project.

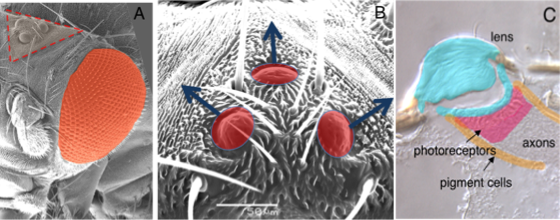

Micro Aerial Vehicles (MAVs) may open up a plethora of new applications for aerial robotics, in both indoor and outdoor scenarios. However, the limited payload of these vehicles constrains the sensors and processing power they can carry and, thus, the level of autonomy they can achieve without relying on external sensing and processing. Flying insects such as Drosophila, on the other hand, can perform impressive maneuvers with a relatively small nervous system. This project will explore the biological fundamentals of the Drosophila ocellar sensory-motor system, one of the mechanisms most likely involved in the fly's flight stabilization, and the possibility of deriving new sensing and navigation systems for MAVs from it. The project will do this through a complete reverse engineering of the ocellar system, estimating the structure and functionality of its neural processing network and then modeling it through the interaction of biological and engineering research. Novel genetic-based neural tracing methods will be employed to extract the topology of the neural network, and behavioral experiments will be devised to determine the functions of specific neurons. The findings will be used to derive a model, which will then be used to characterize the relevant aspects from the point of view of estimation and control. The model will also serve to determine how this sensory-motor system can be adapted to current MAV platforms and to design a proof-of-concept sensing and navigation system.

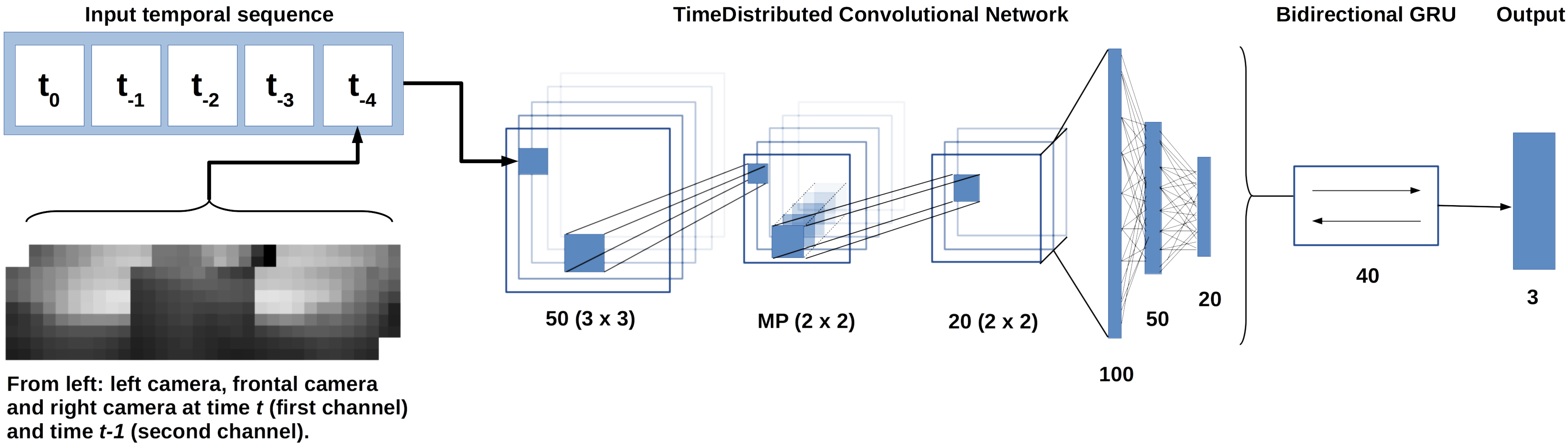

Abstract— In this work, we present a bioinspired visual sensor that estimates angular rates in unmanned aerial vehicles (UAVs) using Neural Networks. We have built a hardware setup that emulates the ocellar system of Drosophila, a set of three simple eyes related to flight stabilization. The device is composed of three low-resolution cameras arranged in a spatial configuration similar to that of the ocelli. Previous approaches based on this ocellar system mostly rely on assumptions such as a known light-source direction or a point light source. In contrast, here we present a learning approach using Artificial Neural Networks to recover the system's angular rates indoors and outdoors without such prior knowledge. A classical computer-vision-based method is also derived as a benchmark for the learning approach. The method is validated with a large dataset of images (more than half a million samples), including both synthetic and real data. The source code of the algorithms and the datasets used in this paper have been released in an open repository.

[PDF] [Code] [Dataset] [BibTeX]
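The abstract above only states that an ANN maps the camera images to angular rates. The following is a minimal, hypothetical sketch of such a mapping: the pixels of the camera images are concatenated into one vector and fed through a one-hidden-layer perceptron with three outputs, read as the angular rates about the three body axes. The layer sizes, the tanh activation, the function names, and the choice of feeding two consecutive frame triplets are assumptions for illustration, not the architecture used in the paper; training (e.g., by backpropagation on the released dataset) is not shown.

```python
import math
import random


def init_mlp(n_in, n_hidden, n_out, seed=0):
    """Create weights for a hypothetical one-hidden-layer perceptron."""
    rng = random.Random(seed)
    w1 = [[rng.gauss(0.0, 0.1) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    w2 = [[rng.gauss(0.0, 0.1) for _ in range(n_hidden)] for _ in range(n_out)]
    b2 = [0.0] * n_out
    return w1, b1, w2, b2


def flatten_cameras(frames):
    """Concatenate the pixels of every image in (image, row, column) order."""
    return [pixel for image in frames for row in image for pixel in row]


def forward(params, x):
    """Map an input vector to three outputs, read as angular rates about the body axes."""
    w1, b1, w2, b2 = params
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(w1, b1)]
    return [sum(w * h for w, h in zip(row, hidden)) + b for row, b in zip(w2, b2)]


if __name__ == "__main__":
    # Placeholder input: two consecutive triplets of uniform 8x10 images,
    # since a rate needs information from more than one instant.
    prev_frames = [[[0.5] * 10 for _ in range(8)] for _ in range(3)]
    curr_frames = [[[0.6] * 10 for _ in range(8)] for _ in range(3)]
    x = flatten_cameras(prev_frames + curr_frames)  # 480 values
    params = init_mlp(n_in=len(x), n_hidden=16, n_out=3)
    print(forward(params, x))  # three untrained, meaningless rate estimates
```

A fully connected layer is the simplest choice for such tiny images; convolutional or recurrent variants would be natural alternatives to compare.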


Abstract— This paper presents a bioinspired system for attitude rate estimation in aerial vehicles using visual sensors. The sensory system consists of three small, low-resolution cameras (10×8 pixels) and is based on insect ocelli, a set of three simple eyes related to flight stabilization. Most previous approaches inspired by the ocellar system use model-based techniques and rely on assumptions such as a known light-source direction. Here, we employ a learning approach using Artificial Neural Networks, in which the system is trained to recover the angular rates in different illumination scenarios with an unknown light-source direction. We present a study with real data in an indoor setting, in which we evaluate different network architectures and inputs.

[PDF]
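The abstract mentions evaluating different network inputs without listing them. As a hedged illustration, assuming each camera delivers 8 rows by 10 columns of grayscale intensities, the sketch below builds three hypothetical input variants from two consecutive frame triplets: the current frames only, their per-pixel temporal difference, and both triplets concatenated. These variants and names are assumptions, not the ones studied in the paper.

```python
ROWS, COLS, N_CAMERAS = 8, 10, 3  # assumed reading of "10x8": 10 columns by 8 rows


def flatten(frames):
    """Flatten N_CAMERAS grayscale images of ROWS x COLS pixels into one list."""
    if len(frames) != N_CAMERAS or any(
        len(image) != ROWS or any(len(row) != COLS for row in image) for image in frames
    ):
        raise ValueError(f"expected {N_CAMERAS} images of {ROWS}x{COLS} pixels")
    return [pixel for image in frames for row in image for pixel in row]


def build_input(prev_frames, curr_frames, variant):
    """Build one of three hypothetical input vectors from two consecutive frame triplets."""
    prev, curr = flatten(prev_frames), flatten(curr_frames)
    if variant == "current":     # latest frames only
        return curr
    if variant == "difference":  # per-pixel temporal change
        return [c - p for c, p in zip(curr, prev)]
    if variant == "both":        # previous and current frames side by side
        return prev + curr
    raise ValueError(f"unknown input variant: {variant!r}")


if __name__ == "__main__":
    prev = [[[0.2] * COLS for _ in range(ROWS)] for _ in range(N_CAMERAS)]
    curr = [[[0.3] * COLS for _ in range(ROWS)] for _ in range(N_CAMERAS)]
    for name in ("current", "difference", "both"):
        print(name, len(build_input(prev, curr, name)))  # 240, 240, 480 values
```

Only the input length changes between these variants (240 or 480 values), so a single network definition can be reused by adjusting its input size; which variant works best under unknown illumination is an empirical question.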
